[I have been relatively quiet recently because I am in Lithuania working flat out to liberate Crystallographic Data and make it Open – expect several posts in the near future.]

Seven years ago I approached SPARC to suggest that together we run an “Open Data” mailing list – it was one of the first times the term “Open Data” had been used – now it’s everywhere, of course. I’m delighted to repost the following item from the list:

https://groups.google.com/a/arl.org/forum/#!topic/sparc-opendata/b2Qkwx5K-nA

In essence it says that the US is going to make Open Data in science actually happen. My thanks to SPARC and many others who have pushed the cause. There’s a lot more that needs to happen, but notice the clause allowing Content Mining, which I have highlighted.

For Immediate Release

Thursday, January 16, 2014

Contact: Ranit Schmelzer

PUBLIC ACCESS TO SCIENTIFIC RESEARCH ADVANCES

Omnibus Appropriations Bill Codifies White House Directive

Washington, DC – Progress toward making taxpayer-funded scientific research freely accessible in a digital environment was reached today with congressional passage of the FY 2014 Omnibus Appropriations Act. The bill requires federal agencies under the Labor, Health and Human Services, and Education portion of the Omnibus bill with research budgets of $100 million or more to provide the public with online access to articles reporting on federally funded research no later than 12 months after publication in a peer-reviewed journal.

“This is an important step toward making federally funded scientific research available for everyone to use online at no cost,” said Heather Joseph, Executive Director of the Scholarly Publishing and Academic Resources Coalition (SPARC). “We are indebted to the members of Congress who champion open access issues and worked tirelessly to ensure that this language was included in the Omnibus. Without the strong leadership of the White House, Senator Harkin, Senator Cornyn, and others, this would not have been possible.”

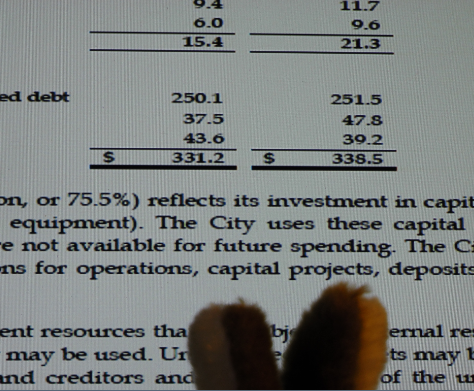

The additional agencies covered ensure that approximately $31 billion of the total $60 billion annual US investment in taxpayer-funded research is now openly accessible.

SPARC strongly supports the language in the Omnibus bill, which affirms the strong precedent set by the landmark NIH Public Access Policy, and more recently by the White House Office of Science and Technology Policy (OSTP) Directive on Public Access. At the same time, SPARC is pressing for additional provisions to strengthen the language – many of which are contained in the Fair Access to Science and Technology Research (FASTR) Act – including requiring that articles are:

· Available no later than six months after publication;

· Available through a central repository similar to the National Institutes of Health’s (NIH) highly successful PubMed Central, a 2008 model that opened the gateway to the human genome project and more recently the brain mapping initiative. These landmark programs demonstrate quite clearly how opening up access to taxpayer-funded research can accelerate the pace of scientific discovery, lead to both innovative new treatments and technologies, and generate new jobs in key sectors of the economy; and

· Provided in formats and under terms that ensure researchers have the ability to freely apply cutting-edge analysis tools and technologies to the full collection of digital articles resulting from public funding.

“SPARC is working toward codifying the principles in FASTR and is working with the Administration to use PubMed Central as the implementation model for the President’s directive,” said Joseph. “Only with a central repository and the ability to fully mine and reuse data will we have the access we need to really spur innovation and job creation in broad sections of the economy.”

Background

Every year, the federal government uses taxpayer dollars to fund tens of billions of dollars of scientific research that results in thousands upon thousands of articles published in scientific journals. The government funds this research with the understanding that it will advance science, spur the economy, accelerate innovation, and improve the lives of our citizens. Yet most taxpayers – including academics, students, and patients – are shut out of accessing and using the results of the research that their tax dollars fund, because it is only available through expensive and often hard-to-access scientific journals.

By any measure, 2013 was a watershed year for the Open Access movement: in February, the White House issued the landmark Directive; a major bill, FASTR, was introduced in Congress; a growing number of higher education institutions, including the University of California System, Harvard University, MIT, the University of Kansas, and Oberlin College, actively worked to maximize access to and sharing of research results; and, for the first time, state legislatures around the nation began debating open access policies supported by SPARC.

Details of the Omnibus Language

The Omnibus language (H.R. 3547) codifies a section of the White House Directive requirements into law for the Departments of Labor and of Health and Human Services, the Centers for Disease Control (CDC), the Agency for Healthcare Research and Quality (AHRQ), and the Department of Education, among other smaller agencies.

Additional report language was included throughout the bill directing OSTP and other agencies, including the US Department of Agriculture, the Department of the Interior, the Department of Commerce, and the National Science Foundation, to keep moving on the Directive policies.

President Obama is expected to sign the bill in the coming days.

###

SPARC®, the Scholarly Publishing and Academic Resources Coalition, is an international alliance of academic and research libraries working to correct imbalances in the scholarly publishing system. Developed by the Association of Research Libraries, SPARC has become a catalyst for change. Its pragmatic focus is to stimulate the emergence of new scholarly communication models that expand the dissemination of scholarly research and reduce financial pressures on libraries. More information can be found at www.arl.org/sparc and on Twitter @SPARC_NA.