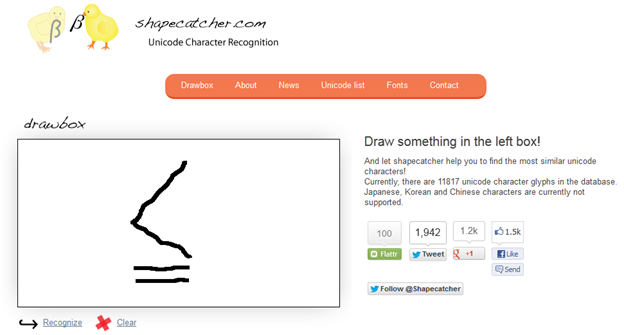

I have been invited to speak to a group of Librarians and Researchers (2012-11-15) by Constance Wiebrands of Edith Cowan University (http://www.ecu.edu.au/ )(? at their Mount Lawley Campus. ) This a great honour – to be invited all across the continent – and I am working out what I shall say. I want to give an idea of the potential for Open Scholarship but also have to make it clear that this needs positive and courageous action. First an (Australian) picture – I’ll explain later …

Male Southern Elephant Seals fighting on Macquarie Island for the right to mate (from http://en.wikipedia.org/wiki/Sexual_selection ).

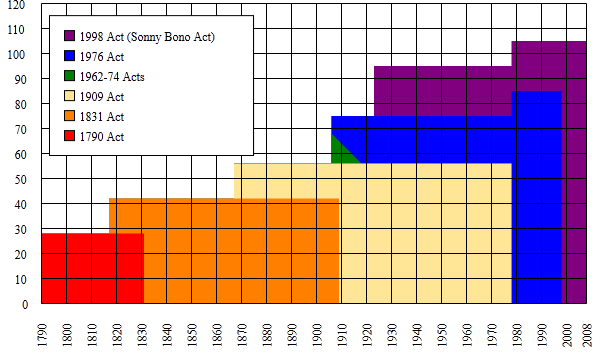

The creation of knowledge in academia (a general term for Universities, Colleges, Research Institutions, Charities) is a major global business. I have been unable to get exact figures (and they will depend on definitions) but the annual cost of publicly funded STM (Scientific Technical Medical) research is about

300 BILLION DOLLARS

The error margin is probably a factor of 3 (so it doesn’t matter whether they are AU or US Dollars, Euros or Pounds). This comes from Research funders (government, charities, foundations and trusts and industry (where the results are intended to be public)). I’d welcome better figures – I have guestimated by 2-3 methods and they end up in the 100-1000 Billion dollar range.

A more accurate figure is the scholarly publishing industry (journals, textbooks, electronic services, etc.) at about

15 BILLION DOLLARS

This is a very profitable industry (the costs of raw materials are near zero – donated by academics – the cost of services are low – donated by academics – and profits can be large (15-40 %). It’s an unregulated industry, with no price or quality control other than by internally and non-transparently by individual publishers. There is no direct price competition as one good is not substitutable by another (a paper in Nature and a paper in Acta Cryst E are both unique and non-equivalent in terms of their knowledge). There *is* a cumulative price limit in that most of the income is provided by University subscriptions to journals. Over the last decade this was funded on a significant annual increase in real terms (perhaps 8%) in library payments but there is public agreement that this cannot and will not continue. Subscribers have to make impossible decisions.

Similarly the consumers (“readers”) have no financial involvement. An STM scholar (I’ll probably lapse into calling them scientist) does not actually pay for the goods (most of which are anyway rented) but puts internal pressure on the University/Library to prefer their requirements to their colleagues. This is not related to the value of the goods but internal political power. The effect of this process is that some subscriptions are cancelled but no market pressure is put on the value and quality of the individual subscriptions. If a powerful researcher says they “must have” subscription X1, then publisher X can charge more or less what they want. There is very little collective bargaining where the subscribers as a whole unite to challenge prices and quality.

This is already a fragile and dysfunctional market, but there are more problems. In a similar way that derivatives can influence sums far larger than the investment, the publication market affects decisions at the funding level. The STM meta-publishing market (e.g. citation indexes) has evolved not simply as a factual record of bibliographic citations but also as a means of evaluating the worth of a publication. I don’t know what the metapublishing market is but let’s say it’s part of the 15 Billion so

3 BILLION DOLLARS

The metapublishing market is now used to control decisions in the funding of STM. By creating numerical values for individuals, departments, institutions and even whole subject areas, decisions can be made simply by running computation. For example the Australian ERA exercise (http://www.timeshighereducation.co.uk/story.asp?storycode=411286 , now scrapped) is being followed by QMC London (http://qmucu.wordpress.com/2012/02/04/save-sbcs/ ) rely heavily on numbers. They include tables such as:

_______________________

[1] The proposed redundancy criteria relate to the period Jan 2008 to Dec 2011, during which many staff were told to concentrate on teaching. These criteria are based on the median achieved by the top 20% of UK researchers; that is, half of the researchers in the top fifth of the country failed to achieve these targets.

|

Category of staff

|

No. of Papers

|

No. of high quality papers [2]

|

Income (£)

|

PhD studentships

|

|

Biology and Chemistry Professor

|

13

|

3

|

500000 at least 200000 as PI

|

2

|

|

Reader

|

10

|

2

|

375000 at least 150000 as PI

|

2

|

|

Senior Lecturer

|

8

|

2

|

300000 at least 120000 as PI

|

1

|

In other words if a researcher does not publish a given amount of given measured quality and attracts a given income each year they will be fired.

The problem with numbers is not only that they are simplistic but that they also depend on who compiles them. If the term “high quality paper” is to be used then it might be expected that it would be controlled by universities, or funders or a public council or…

But in fact the numbers are created by a non-transparent process by commercial organizations whose only motive is to maximize profit (simplistically income over costs). This means selling as much metadata as possible for the highest prices and making the costs as low as possible (in this case by automation). In essence academic and funding decisions are taken by using numbers created by Elsevier or Thomson-Reuters in non-transparent processes. Two example of problems are:

- WHAT IS A CITATION? (this is a non-transparent process and no two “authorities” agree

- WHAT IS CITABLE? Again non transparent

As an example of the problem, I was told by the International union of Crystallography that their Acta Crystallographica E is no longer indexed by TR. Now IMO Acta Cryst E is an outstanding journal. It’s the best data journal in the world. The authoring quality is required to be technically very high, the data are reviewed by humans and machines and the result is a semantic publication. But when Acta Cryst asked TR Why? they were apparently told “too many self-citations”.

The point is that a non-answerable commercially-driven organization is making these decisions, without reference to the much larger community of researches and publishers. For me a citation is an objective scholarly object and good software should be able to make independent decisions about it. Self-citations are part of the scholarly record. They may have an important role is establishing quality and credibility of metadata – who knows? It’s possible to filter them out downstream if required. But since TR don’t make available their raw citations (and the reason can only be commercial) we cannot make informed decisions.

So here is my zeroth recommendation for the scholarly community.

-

WE MUST CHALLENGE FOR OUR PUBLIC RIGHT TO OUR INFORMATION

What is “our right” is a political judgment. For me and many others in the Open movement we see the knowledge transmitted to publishers as being owned by the community with the publishers being paid for added value. But most publishers see this as “their property” with an increasing number looking to re-exploit in new ways (such as selling images from publications or charging fees for contentmining). Unfortunately we have had two decades of supine acquiescence to appropriation of rights by publishers and the reclamation of our own property is now much harder than if we had started it in (say) 1995. I have no examples of heads of Universities speaking out and claiming rights to scholarly output (mandates for “Open Access” don’t count as they do not reclaim serious rights.

So here is my first recommendation to the academic community:

- WE MUST CREATE AND CONTROL AN INDEPENDENT RECORD OF METADATA (bibliography and citations) OF SCHOLARLY PUBLICATIONS

That is the most important thing we can do for the first step. We need to know objectively and publicly who published what and who cited what. It’s technically fairly easy to do for modern electronic publications. We and others have software which can crawl publications on the open web, index their bibliographic data and extract the citations. The only problem is that publishers will claim that they “own” the citation data. This claim can have no moral or ethical justification. So the effect is that universities pay large amounts of money for metadata of unknown quality on which they then base their judgments. If Google can index the academic literature so can we. And it need not be expensive.

The key point to realise is that we are in a large, dysfunctional, fragile market.

The elephant seals and Bird-Of-Paradise (http://en.wikipedia.org/wiki/Goldie%27s_Bird-of-paradise)

expend a considerable amount of effort in intraspecies competition (males attracting females). I am not a theoretical biologist but this energy does not help to protect the survival of the species per se (as opposed to the predator-prey (http://en.wikipedia.org/wiki/Predation) process). In fact growing long feathers may be a serious disadvantage when a new predator emerges.

The academic community spends a huge amount of money and effort in intra-research competition. While some of this is healthy it is unclear that the heights we have reached (huge competition to publish in certain journals, huge rejection rates, huge inefficiency) leads to better science. It’s probably neutral. What is clear is that when something changes the evolutionary niche there will be massive disruption. The major places this might come from include:

- New disruptive technology

- New unconventional players in the market (e.g. mobile providers)

- New political pressures (e.g. from funders)

- New attitudes in researchers allied to the Open movement

- Involvement of the citizenry

Because it’s disruptive it is not predictable. However there *are* things academia should be doing anyway and I’ll continue in the next blogs.