Sleepless the bear and #ami2 the kangaroo meet Duck.

S: Hello Duck. What are you doing?

D: I’m helping #ami2 create her commandline parser. We’re going to use ducktyping.

S: what’s a commandline? [*]

Chuff: it’s one of the greatest inventions in computing. (https://en.wikipedia.org/wiki/In_the_Beginning…_Was_the_Command_Line) You type commands that you and the machine both understand. It’s better than GUIs. It interfaces with UNIX tools. #animalgarden will use commandlines for #ami2.

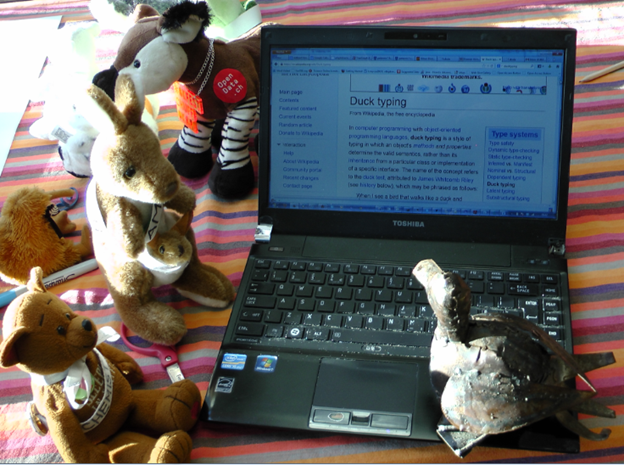

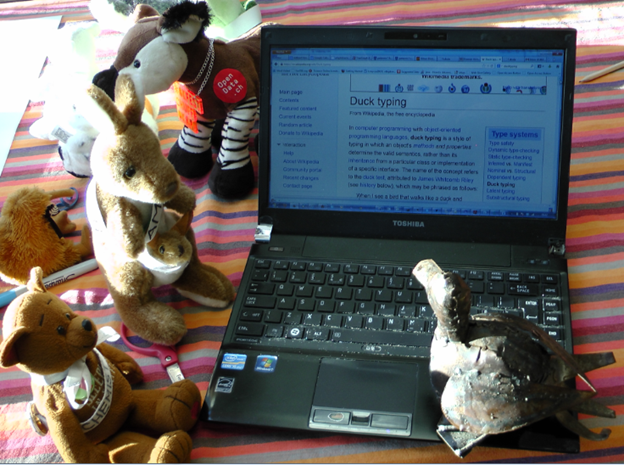

S: so what’s Ducktyping?

D: Let’s look in Wikipedia (https://en.wikipedia.org/wiki/Duck_typing ). “the duck test, attributed to James Whitcomb Riley (see history below), which may be phrased as follows:

When I see a bird that walks like a duck and swims like a duck and quacks like a duck, I call that bird a duck.[1]

I walk like a duck and I quack like a duck so I am a duck.

A: The test is a rule. I can implement rules. Is there more?

D:

In duck typing, one is concerned with just those aspects of an object that are used, rather than with the type of the object itself. For example, in a non-duck-typed language, one can create a function that takes an object of type Duck and calls that object’s walk and quack methods. In a duck-typed language, the equivalent function would take an object of any type and call that object’s walk and quack methods. If the object does not have the methods that are called then the function signals a run-time error. If the object does have the methods, then they are executed no matter the type of the object, evoking the quotation and hence the name of this form of typing.

Duck typing is aided by habitually not testing for the type of arguments in method and function bodies, relying on documentation, clear code and testing to ensure correct use.

S: So why are we using it?

D: Humans don’t like complicated commandlines, so we’ll make it very simple for them. The commandline has to work out what they want?

S: You mean guess?

A: No. I am not allowed to guess. I have to have precise rules.

D: Exactly. So here’s the problem. #ami2 can run over many different types of object. If we had commandline options for all of them the humans would forget them and muddle them. We’ll do the hard work. First the command itself. #ami has lots of things it can do, but if we write something like:

java –jar org.xmlcml.xhtml2stm.species.SpeciesVisitor

the humans will mistype it or get bored and never even try. So we’ll replace this with a script:

species

S: Isn’t that a lot of work for PMR and #ami2?

A: No. Mark Williamson showed us how to use maven to do it automatically. It’s already in the POM file.

D: Now we come to the ducktyping. Let’s say we ask a human to type:

species –-input ducks.html –-inputFormat HTML

half of them will mistype it, half will forget the “–inputFormat” and half will get bored. (The numbers add up since some humans will make TWO mistakes). So we use ducktyping to work out what they want.

species –i ducks.html

S: “-i”??

D: Some humans don’t like long words so we give them two options (–input and –i). It’s hardly any more work for PMR.

Chuff: So how do we know it’s an HTML file?

D: we use https://en.wikipedia.org/wiki/Convention_over_configuration . We assume that the suffix “.html” means it’s an HTML file.

S: Suppose it isn’t?

D: Then the job fails.

Tasmanian Devil: Serves them right. Devil will drag them off to hell.

S: No, we like to think humans can learn gradually. We’ll try to fail gracefully. We can ask the file what type it thinks it is.

D: Many good file formats have a magic number that tells you what they are. XML files should know what namespace(s) they have. For example I could say “this XML file contains Chemistry (CML) and Maths (MathML) embedded in XHTML.

S: Wow. And are you implementing this, #ami?

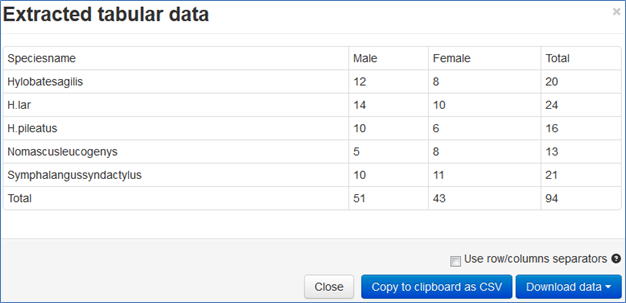

A: PMR said he might. At present there are the following input types:

- HTML (from the publisher’s site)

- HTML from #ami2

- PDF

- XML from publisher

- XML from PubMed (NLM)

- SVG (from #ami)

- Text

- DOI

And lists or directories of all the above

S: Wow! What a lot to have to manage. Can it go wrong?

D: Yes. If a human points #ami2 at a list of holiday photos we won’t get much sense out.

Chuff: If we want people to use it we have to assume they will goof up whenever they can. We have to do almost all the work.

D: But when it’s working, then it’s easy for all of us.

S: So are there any optional options?

D: Yes. For example where we have a directory with many files in, we might want to filter those we want. For example:

species –i ducks/ –inputFormat xml htm html

will look for files of the form

ducks/*.xml ducks/*.htm ducks/*.html

S: So presumably the bored ones can just type “-if”?

D: Yes.

OWL: What happens if the directory contains other directories?

D: What a smart question!

OWL: I am the semantic OWL after all.

D: We have an option for recursion through the directories.

S: “Recursion”??

D: One of the most powerful and beautiful concepts in software (https://en.wikipedia.org/wiki/Recursion ). You ask the method to invoke itself (either directly or implicitly).

S: Won’t that go on for ever.

D: If someone’s made a mistake, yes. Then you get a Stack Overflow …

Devil: Or I cart you off to hell.

D: … but good programs trap this. #ami will use the (–r –recursive) flag. If present it will go through all directories.

S: So I can write:

species –i notebook/ -r –if html pdf

D: exactly. The “/” tells us it has to be a directory. And if you type:

species –i / -r –if html pdf

it will traverse all your disk…

Devil: Unless I carry you off before that.

S: OK. What about output.

Duck: we need you to specify it. If you omit it it would either have to use the same place each time (overwriting) or use the input as a template. We might do that later. But at present we write:

species –i ducks/ html –o my/results/species.xml

and the results go to a named file.

S: Isn’t it too complicated for humans to work out where to put their results?

D: We try to make it easy for them. But at some stage a scientist has to work out what they are doing…

Devil … because if they don’t, I’ll cart them off…

All:TO HELL.

[*] I was asked this question by an informatics student [no names or places] halfway through their PhD. People just assume button pressing will solve everything.