The Isle of Skye has the most dramatic mountains in the UK, and Sgurr nan Gillean is one of the most visible and best known. For me the Pinnacle Ridge (left) [1] epitomises the reality of computing.

There is an often-quoted Gartner’s Hype Cycle for technology, with a graph like this (not Open). After the

- Technology Trigger comes the

- Peak of Inflated Expectations then

- Trough of Disillusionment,

- Slope of Enlightenment and

- Plateau of Productivity.

Great fun for consultants. I sometimes even believe it. However, our software projects usually behave like this:

- The idea. The lash-up demo. Everything works. Everything moves fast…

- The first public wonder demo. People are wowed…

- Everything breaks. Upgrades in the browser kill the demo. Everything takes 10 times longer than it used to. It really does.

- The endless fractal Ridge of Refactoring…

- …and occasionally a stunning view through the clouds

What is refactoring? A metaphor might be maintaining a garden (weeds, pruning, all the seeds of decay). Lots of work goes in but little seems to change. Sometimes (as when parts are ploughed up) it seems to go backwards. Refactoring is making your code better without having anything new to show people. It’s time-consuming, often solitary, and filled with local troughs of disillusion. Frequently you go downhill, and when you have climbed the next peak you are only a little higher than before. But it is a little higher.

Here’s a simple example. I want to print some lines to the output (don’t worry about the Java; the idea should be clear):

String[] ss;

...

for (int i = 0; i < ss.length; i++)

System.out.println(ss[i]);

This code goes through each string by incrementing the index i and printing the string at that index. I have written thousands of little loops like this. It’s not wrong, but it’s not as good as it could be. Why?

- I haven’t delimited the loop (without {} it is easy to get loop structures wrong when editing).

- I might edit the code and change the value of i within the loop.

- One of the entries in the array might be null.

There are several other ways of introducing time-bombs.
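The three time-bombs listed above can be made concrete with a small sketch. The class and method names here (`LoopPitfalls`, `undelimited`, `indexChanged`, `totalLength`) are invented for illustration and are not part of the original code. Note that `System.out.println` itself simply prints "null" for a null entry, so the damage from a null usually appears later, when something calls a method on it.

```java
import java.util.ArrayList;
import java.util.List;

public class LoopPitfalls {

    // Pitfall 1: without braces, only the first statement belongs to the loop.
    static List<String> undelimited(String[] ss) {
        List<String> log = new ArrayList<>();
        for (int i = 0; i < ss.length; i++)
            log.add("line: " + ss[i]);
            log.add("done with a line"); // looks like loop body, but runs once, after the loop
        return log;
    }

    // Pitfall 2: the index is an ordinary variable, so an edit in the body can change it.
    static List<String> indexChanged(String[] ss) {
        List<String> log = new ArrayList<>();
        for (int i = 0; i < ss.length; i++) {
            log.add(ss[i]);
            i++; // a stray edit: every second entry is now silently skipped
        }
        return log;
    }

    // Pitfall 3: a null entry fails as soon as a method is called on it.
    static int totalLength(String[] ss) {
        int total = 0;
        for (int i = 0; i < ss.length; i++) {
            total += ss[i].length(); // NullPointerException if ss[i] is null
        }
        return total;
    }

    public static void main(String[] args) {
        String[] ss = {"methane", "ethane", "propane"};
        System.out.println(undelimited(ss));
        System.out.println(indexChanged(ss));
        try {
            totalLength(new String[] {"methane", null});
        } catch (NullPointerException e) {
            System.out.println("a null entry broke the loop");
        }
    }
}
```

None of these is a compile error, which is why they behave like time-bombs rather than ordinary bugs.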

So I edit it:

List<String> moleculeNameList;

...

for (String moleculeName : moleculeNameList) {

CMLUtil.output(moleculeName);

}

I haven’t changed the functionality of the code; my colleagues won’t notice anything different. I might have had to make many hundreds of edits like this (“blood on the floor at midnight”). There will be many times when I am disillusioned: the code no longer works. Often I feel like a hermit crab who has discarded its safe shell and hasn’t yet found another. I can’t go to sleep until it’s fixed. I don’t know how long that will be. Programmers make mistakes at 0300. There is fog all around (this is very common on Skye, and in the Cuillins the compass points in the wrong directions).

Finally it all compiles. And the Unit tests (more later) run. I get the green bar. Now I can go to sleep (or at least to bed).

So what is better, and why did I refactor? Simply, the code is easier to read and less likely to break. I can output the result to somewhere other than the screen. If someone in the Blue Obelisk or elsewhere wants to re-use it, it’s easier and quicker. It’s close to a community component. And that is what we are striving for.
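As a rough sketch of what “somewhere other than the screen” can mean: the post does not show the body of `CMLUtil.output`, so the `output` method below is a hypothetical stand-in, and skipping null entries is my assumption rather than the library’s documented behaviour. The point is only that once output goes through one method, the destination becomes a parameter.

```java
import java.io.PrintWriter;
import java.io.StringWriter;
import java.util.Arrays;
import java.util.List;

public class OutputSketch {

    // Hypothetical stand-in for CMLUtil.output; the real method is not shown in the post.
    static void output(String line, PrintWriter out) {
        if (line == null) {
            return; // assumption: null entries are skipped rather than printed
        }
        out.println(line);
    }

    // The refactored loop: braces, no index variable, destination passed in.
    static void outputAll(List<String> moleculeNameList, PrintWriter out) {
        for (String moleculeName : moleculeNameList) {
            output(moleculeName, out);
        }
        out.flush();
    }

    public static void main(String[] args) {
        List<String> names = Arrays.asList("methane", null, "ethane");

        // Same loop, two destinations: the screen...
        outputAll(names, new PrintWriter(System.out));

        // ...and an in-memory buffer that a test or another program can inspect.
        StringWriter buffer = new StringWriter();
        outputAll(names, new PrintWriter(buffer));
        System.out.println("buffered characters: " + buffer.toString().length());
    }
}
```

Writing to a `StringWriter` is also what makes the loop easy to cover with a unit test, since the test can compare the buffer against the expected text.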

The good news is that Refactoring is very well appreciated in the community. Eclipse has special functions for refactoring. With a little investment in time it can go through all your code and make simple changes (rather like this one) on hundreds of instances without making a mistake. Now that’s productivity!

[1] See http://en.wikipedia.org/wiki/Image:Pinnacle_ridge%26gillean2.jpg for authorship and (Open) copyright of image

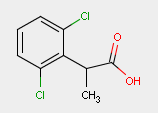

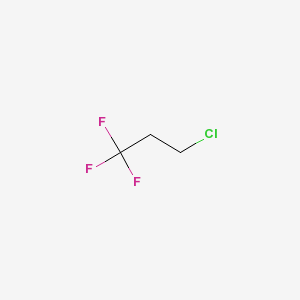

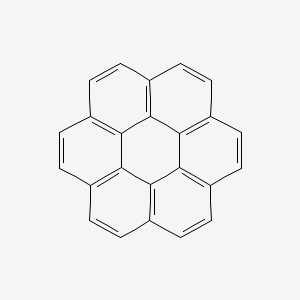

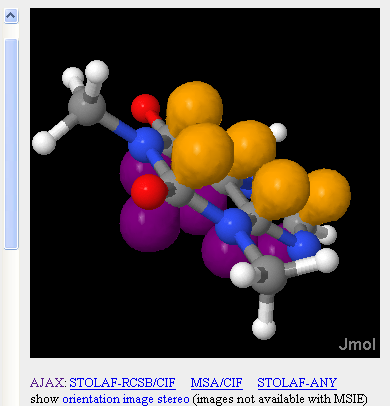

Molecular Weight: 132.512 g/molMolecular Formula: C3H4ClF3Coronene:

Molecular Weight: 132.512 g/molMolecular Formula: C3H4ClF3Coronene:

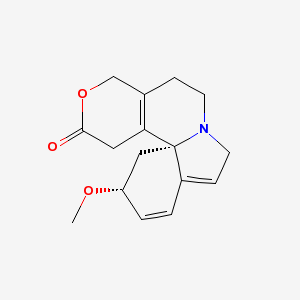

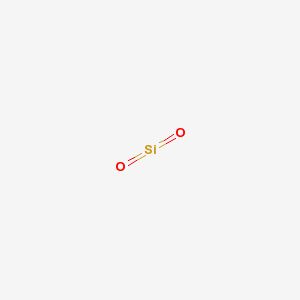

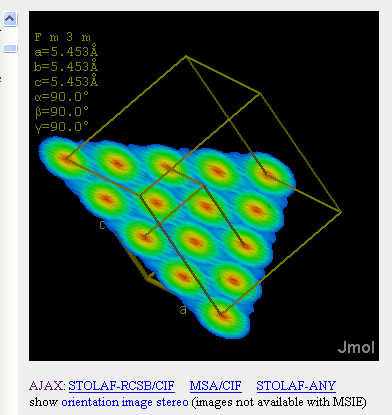

Molecular Weight: 60.0843 g/molMolecular Formula: O2Si

Molecular Weight: 60.0843 g/molMolecular Formula: O2Si

October 4th, 2006 at 9:50 am

I can think of several reasons for this, Peter. I downloaded OpenBabel some time ago, wrote an interface layer (which treats C++ object instances as handles), built a DLL from the source using MinGW and wrote a wrapper for Delphi around the whole thing. It was very much a rush job and I have never gone back to clean it up. It was a quick hack, but it worked well enough for what we were trying to do at the time (SMILES/MOLFILE conversion driven from an Access database). In my opinion, there’s an ethical issue in contributing code which you know to be sub-standard and have neither the time nor inclination to redact.