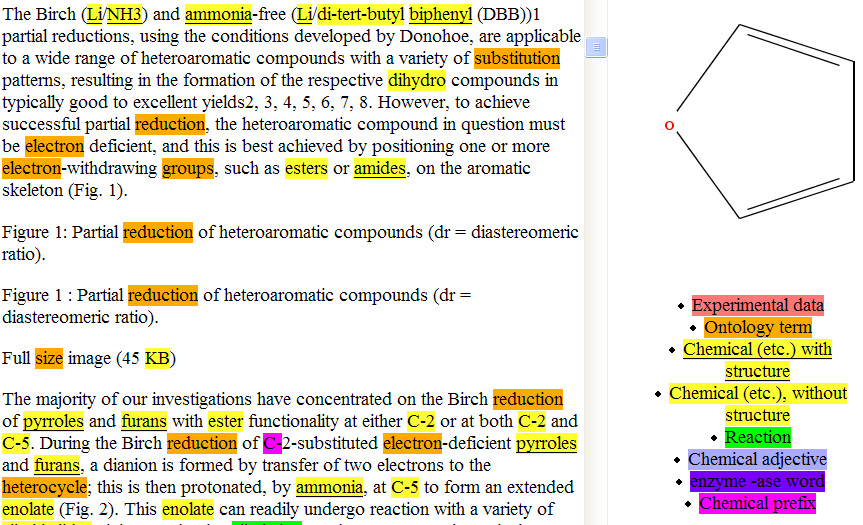

I am delighted to congratulate the Royal Society of Chemistry on their award for Project Prospect. Prospect is one of the first examples of true semantic publishing. We’re pleased to have been closely involved – 5 years ago David James and Alan McNaught of RSC funded two summer students, Fraser Norton and Joe Townsend, to look at creating a tool to check data in RSC publications.

It was a boring job – they had to read over a hundred papers and tabulate things like how the authors recorded the Molecular Weight, analytical data, etc. The RSC gave guidelines to which authors would conform, which of course they did (about 5% of the time!). “MP”, “MPt.”, “Melting Point” and “M.P.” show the sort of variation. So OSCAR-1 had complex regular expressions (tools to search for variable lexical forms) to check the chemistry.
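OSCAR-1's actual expressions are not reproduced here. What follows is only a minimal Python sketch of the idea: a single regular expression that accepts the label variants quoted above and extracts the temperature or temperature range that follows. The pattern `MELTING_POINT` and the helper `find_melting_points` are hypothetical names for illustration.

```python
import re

# Hypothetical pattern (not OSCAR-1's): a melting-point label in any of the
# variant spellings, an optional ":" or "=", then a value or a range.
MELTING_POINT = re.compile(
    r"\b(?:melting\s+point|m\.?\s*p(?:t\.?|\.)?)"  # Melting Point, MP, MPt., M.P.
    r"(?!\w)"                                     # reject longer words such as "MPa"
    r"\s*[:=]?\s*"                                # optional separator
    r"(\d+(?:\.\d+)?)"                            # lower (or only) temperature
    r"(?:\s*-\s*(\d+(?:\.\d+)?))?",               # optional upper bound of a range
    re.IGNORECASE,
)


def find_melting_points(text):
    """Return a (low, high) tuple per melting point; high == low for a single value."""
    results = []
    for match in MELTING_POINT.finditer(text):
        low = float(match.group(1))
        high = float(match.group(2)) if match.group(2) else low
        results.append((low, high))
    return results


print(find_melting_points("M.P. 151-153; MPt. 98.5; Melting Point: 210; mp 77"))
# [(151.0, 153.0), (98.5, 98.5), (210.0, 210.0), (77.0, 77.0)]
```

A real checker would also have to handle units, degree signs and plausible ranges. The point of the sketch is only that one pattern can absorb the spelling variation that authors produce.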

The next year Joe was joined by Chris Waudby and Sam Adams [1], and together they built a large and very impressive Java framework for reading documents and extracting the data. This tool, still running perfectly after 5 years with zero maintenance, is mounted on the RSC site and has been used by hundreds or thousands of authors and reviewers.

In the same summer, Timo Hannay from Nature Publishing Group funded Vanessa deSousa, who created Nessie. Nessie used OSCAR tools to find likely chemical names in text and then search for them in a local lexicon downloaded from the NIH/NCI database. Where this failed, Nessie would search chemical suppliers' sites through the ChemExper website, often reaching recall rates of ca 60%. This was an impressive case of gluing together several components.
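The cascade Nessie used (try the local lexicon, fall back to a remote search only on a miss) can be sketched in a few lines of Python. This is a hypothetical illustration, not Nessie's code: `resolve_names`, `fraction_resolved`, the toy identifiers and the `supplier_index` dictionary are all assumptions standing in for the NIH/NCI download and the ChemExper search, and no real service API is called.

```python
from typing import Callable, Dict, Iterable, Optional


def resolve_names(
    candidates: Iterable[str],
    local_lexicon: Dict[str, str],
    remote_lookup: Callable[[str], Optional[str]],
) -> Dict[str, Optional[str]]:
    """Map each candidate name to an identifier: local lexicon first, remote fallback second."""
    resolved: Dict[str, Optional[str]] = {}
    for name in candidates:
        key = name.lower()  # assumed normalisation: lexicon keys are lower-case
        if key in local_lexicon:
            resolved[name] = local_lexicon[key]
        else:
            resolved[name] = remote_lookup(name)  # None means "not found anywhere"
    return resolved


def fraction_resolved(resolved: Dict[str, Optional[str]]) -> float:
    """Share of candidate names that received an identifier."""
    if not resolved:
        return 0.0
    return sum(v is not None for v in resolved.values()) / len(resolved)


# Toy data standing in for the downloaded lexicon and the supplier search.
lexicon = {"benzene": "LEX:0001", "toluene": "LEX:0002"}
supplier_index = {"2-nitrotoluene": "SUP:0017"}

result = resolve_names(["Benzene", "2-nitrotoluene", "unobtainium"], lexicon, supplier_index.get)
print(result)
print(fraction_resolved(result))
```

Passing the remote search in as a callable keeps the cascade independent of any particular service, which is roughly what "gluing together several components" amounts to. `fraction_resolved` matches the post's "recall" only if every candidate is a genuine chemical name.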

These successes became widely known and as a result we were able to collaborate with Ann Copestake and Simone Teufel in the Computer Laboratory and get a grant for SciBorg, the chemist’s amanuensis. The idea is – inter alia – to have a chemically intelligent desktop which “understands” chemical natural language sufficiently to add meaning for readers of documents. The RSC continued to sponsor us in partnership with Nature and the International Union of Crystallography.

Peter Corbett joined us on the projects and has taken the previous OSCARs and created OSCAR3, which can now recognise chemical terms in over 80% of cases. (I must be more precise: given a corpus, OSCAR3 will recognise which words or phrases conform to a 30-page definition of chemical language with a combined precision-recall of that magnitude. I do not believe there is a superior tool when measured like this. It can also provide a connection table, though rather less often, depending partly on the specificity or generality of the language.)
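The post does not say exactly how precision and recall were combined. A common choice is the F-measure (F1), their harmonic mean. Assuming that, and assuming exact matching of entity spans given as hypothetical (start, end) character offsets, the arithmetic looks like this. The function name and the toy spans are illustrative only and are not OSCAR3 data.

```python
def precision_recall_f1(predicted, gold):
    """Exact-match scoring of predicted entity spans against gold-standard spans."""
    predicted, gold = set(predicted), set(gold)
    true_positives = len(predicted & gold)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Toy annotated corpus: five gold spans, five predictions, four of them correct.
gold = {(0, 7), (12, 19), (25, 33), (40, 48), (50, 55)}
predicted = {(0, 7), (12, 19), (25, 33), (40, 48), (60, 64)}
print(precision_recall_f1(predicted, gold))
```

Here precision, recall and F1 all come out at 0.8. The harmonic mean punishes imbalance: a tool with precision 1.0 but recall 0.5 scores only about 0.67.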

Last summer Richard Marsh and Justin Davies – sponsored by RSC and Unilever – returned to the data checking aspect of OSCAR and created the prototype of a semantic chemical authoring tool. This can read a paper and flag up many types of error, and also help authors create data where certain types of error are impossible. The next phase of this is evolving and we’ll tell you more shortly.

We are in frequent contact with the RSC, particularly with Richard Kidd and with Colin Batchelor, who visits often. They have done much of the design and implementation of Prospect, and it has required significant effort and investment.

But it’s worth it. The data quality is higher, and the data are easier to understand and search. They are also easier to turn into semantic content. There’s a way to go yet: we need to address reactions and data with scientific units of measurement, but it is starting to look easier.

After all there is probably another prize to be won next year…

[Note: I later overwrote this by mistake after publishing but found it on Planet Scifoo. Saved from having to rekey for the scholarly record!]

[1] SAM, I AM VERY SORRY FOR MISSING YOU OUT IN THE FIRST DRAFT.