#scholarlyhtml

We are gearing up for the weekend scholarly hackfest in Cambridge. Like all hackfests it is organised chaos. But we are assembling a range of top-class creators. They include:

- Peter Sefton (USQ, ICE, HTML)

- Martin Fenner (Hannover, WordPress)

- Brian McMahon (Int. Union of Crystallography – publishing, validation, dictionaries…)

- Mark MacGillivray (Edinburgh, Open bibliography)

- Dan Hagon (ace hacker)

- JISC (Simon Hodson will be here on Friday)

- PM-R group (Sam Adams, Joe Townsend, Nick England, David Jessop, Lezan Hawizy, Brian Brooks, PM-R, Daniel Lowe; Lensfield, JUMBO, OSCAR, Chemical Tagger, etc…)

So far the following themes are emerging:

- Data publication. How do we take a semantic data object and publish it? Currently we are looking at chemistry (crystallography and compchem) and general scientific numeric data.

- Bibliography. How can we regain control of bibliography? WE authors need the tools to create what WE want to say – not to have to waste time creating something that sucks (“Harvard style” rather than BibTeX) and whose sole purpose is to save the publishers money.

- A general flexible authoring platform under our control.

Here’s Martin (http://blogs.plos.org/mfenner/2011/03/07/the-trouble-with-bibliographies/). Some excerpts…

Unfortunately almost all bibliographies are in the wrong format. What you want is at least a direct link to the cited work using the DOI (if available), and a lot of journals do that. You don’t want to have a link to PubMed using the PubMed ID as the only option (as in PubMed Central), as this requires a few more mouseclicks to get to the fulltext article. And you don’t want to go to an extra page, then use a link to search the PubMed database, and then use a few more mouseclicks to get to the fulltext article (something that could happen to you with a PLoS journal).

A bibliography should really be made available in a downloadable format such as BibTeX. Unfortunately journal publishers – including Open Access publishers – in most cases don’t see that they can provide a lot of value here without too much extra work. One of the few publishers offering this service is BioMed Central – feel free to mention other journals that do the same in the comments.

And

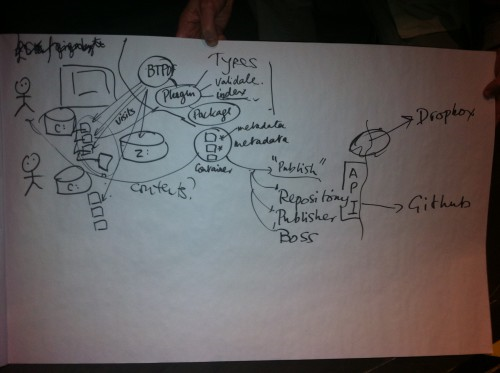

My idea for the hackfest is a tool that extracts all links (references and weblinks) out of an HTML document (or URL) and creates a bibliography. The generated bibliography should be both in HTML (using the Citation Style Language) and BibTeX formats, and should ideally also support the Citation Typing Ontology (CiTO) and COinS – a standard to embed bibliographic metadata in HTML. I will use PHP as a programming language and will try to build both a generic tool and something that can work as a WordPress plugin. Obviously I will not start from scratch, but will reuse several already existing libraries. Any feedback or help for this project is much appreciated.

If I had a tool with which I could create my own bibliographies (and in the formats I want), I would no longer care so much about journals not offering this service. One big problem would still persist, and that is that most subscription journals wouldn’t allow the redistribution of the bibliographies to their papers. A single citation can’t have a copyright, but a compilation of citations can. I’m sure we will also discuss this topic at the workshop, as Peter Murray-Rust is one of the biggest proponents of Open Bibliographic Data.
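To make Martin's idea a little more concrete, here is a minimal sketch of the first step: pull every link out of an HTML document and write a rough BibTeX entry for each. It is written in Python purely for illustration (Martin plans to use PHP) and is not his tool. The names `LinkCollector`, `extract_links`, `to_bibtex` and `DOI_PATTERN` are made up for this example, the DOI pattern is only a heuristic, and the sample DOI is a placeholder. It does not escape BibTeX special characters, and it does not attempt CSL, CiTO or COinS output.

```python
import re
from html.parser import HTMLParser

# Assumption: a DOI looks like "10.<digits>/<suffix>". This is a rough
# heuristic for illustration, not a complete DOI grammar.
DOI_PATTERN = re.compile(r"10\.\d{4,9}/[^\s\"'<>?#]+")


class LinkCollector(HTMLParser):
    """Collects (href, link text) pairs from <a> elements."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None   # href of the <a> currently open, if any
        self._text = []     # text fragments seen inside that <a>

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self._href = href
                self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def extract_links(html):
    """Return a list of (href, text) for every link in the HTML string."""
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    return collector.links


def to_bibtex(links):
    """Turn (href, text) pairs into @misc entries (hypothetical keys ref1, ref2, ...)."""
    entries = []
    for i, (href, text) in enumerate(links, start=1):
        fields = []
        if text:
            fields.append(f"  title = {{{text}}}")
        match = DOI_PATTERN.search(href)
        if match:
            fields.append(f"  doi = {{{match.group(0)}}}")
        fields.append(f"  url = {{{href}}}")
        entries.append(f"@misc{{ref{i},\n" + ",\n".join(fields) + "\n}")
    return "\n\n".join(entries)


if __name__ == "__main__":
    # Placeholder document; the DOI and URLs are invented for the example.
    sample = (
        '<p>See <a href="https://doi.org/10.1000/xyz123">Example paper</a> '
        'and <a href="http://example.org/">a web page</a>.</p>'
    )
    print(to_bibtex(extract_links(sample)))
```

A real tool would go on to resolve each DOI to full metadata (authors, journal, year) rather than stopping at the link text, which is where the existing libraries Martin mentions would come in.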

We are able to support this through an EPSRC “follow-up” grant – Pathways to Impact – whose purpose is to disseminate what we have already achieved. This hackfest builds on OSCAR and several JISC projects (who are also supporting some of the group at Cambridge).