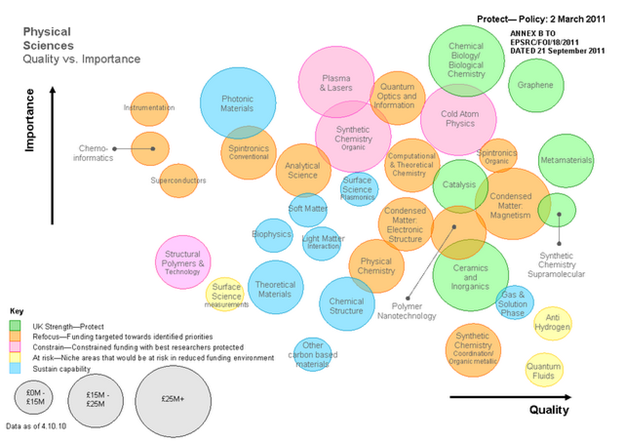

[I blogged yesterday](/pmr/2011/09/27/the-clarke-bourne-discontinuity/) about [Paul Clarke’s exposure](http://shear-lunacy.blogspot.com/2011/09/bourne-incredulity.html) of the EPSRC “decision making” process, which appears to use a 2-D plot of “quality” vs “importance”. It has now seriously upset me. It needs the spotlight of Openness turned upon it.

Why is it so upsetting?

- It appears to be used as an automatic method of assessing the fundability of research proposals. This in itself is highly worrying. Everyone agrees that assessing research is hard and inexact. Great scientists and scientific ideas are often missed, especially when they challenge orthodoxy. Bad or (more commonly) mediocre science is funded because it’s in fashion. “Safe” is often more attractive than “exciting”. The only acceptable way is to ask other scientists (“peers”) to assess scientific proposals, which we do without payment because we know it is the bedrock of acceptable practice. There is not, and never will be (until humans are displaced by machines), an automatic way of assessing science. Indeed, when a publisher rejects or accepts papers without sending them out for peer review, most scientists are seriously aggrieved. For a Research Council, which allocates public money, it is completely unacceptable to allocate (or withhold) resources without peer review.

And “peer review” means review by practising scientists, not office apparatchiks.

- It appears to be completely unscientific. The use of unmeasurable terms such as “importance” and “quality” is unacceptable for a scientific organization. We put up with “healthy”, “radiant” and “volume” (of hair) as marketing terms: at best an expression of product satisfaction, at worst dangerously misleading. But “quality”?

It might be possible to use “quality” as a shorthand for some metric. Perhaps the average impact factor (arggh!) of a paper in that discipline. Or the average score given by reviewers in previous grant proposals (urrgh!). Those would indicate the current metric madness, but at least they can be made Open and reproducible. “Importance”? The gross product of industries using the discipline? The number of times the term occurs in popular science magazines? Awful, but at least reproducible.

But this graph, which has no metric axes and no zero point (this is the ENGINEERING and PHYSICAL SCIENCES Research Council!), fails even high-school criteria of acceptability. Suppose the scales run from 99 to 100? Then all subjects are ultra-important and all subjects are top quality. Any reviewer would trash this immediately.
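To make the 99-to-100 point concrete, here is a small sketch with invented scores (nothing below is EPSRC data, and `axis_position` is a hypothetical helper). It places two hypothetical subjects on an axis drawn from zero and on one silently cropped to 99–100: on the cropped axis they look far apart, although their scores differ by well under 1%.

```python
# Illustrative only: the scores are invented, not taken from any EPSRC plot.
# Shows how an axis with no labelled range or zero point exaggerates differences.

def axis_position(score: float, axis_min: float, axis_max: float) -> float:
    """Map a raw score to a 0..1 position along a plot axis."""
    return (score - axis_min) / (axis_max - axis_min)

# Two hypothetical subjects rated on a 0-100 "quality" scale.
scores = {"subject A": 99.2, "subject B": 99.8}

for name, s in scores.items():
    on_zero_axis = axis_position(s, 0.0, 100.0)   # axis drawn from zero
    on_cropped = axis_position(s, 99.0, 100.0)    # axis silently cropped to 99-100
    print(f"{name}: {on_zero_axis:.3f} along a 0-100 axis, "
          f"{on_cropped:.3f} along a 99-100 axis")

# The underlying difference between the two subjects is tiny.
ratio = scores["subject B"] / scores["subject A"]
print(f"B/A ratio: {ratio:.4f}")
```

Without labelled axes a reader cannot tell which of these two pictures they are looking at, which is exactly why an unscaled plot says nothing.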

I intend to pursue this – in concert with Paul and Prof. Tony Barrett from Imperial College. We have to expose the pseudoscience behind this. (Actually it would make an excellent article for Ben Goldacre’s “Bad Science” column in the Guardian.) We first have to know the facts.

Paul and Tony have made Freedom of Information (FOI) requests to the EPSRC but have not yet received anything. I have suggested they use http://whatdotheyknow.com, as it publicly records all requests and correspondence.

The EPSRC cannot hide. They are a government body and are required to provide information. If they try a Sir Humphrey approach (“muddle and fluff”), we go back with specific questions. And if they fluff those, the matter can be taken to the Information Commissioner.

The information will definitely come out.

So when it does, what are the bets?

- 1-2: they can’t trace the origin, mumble and fluff

- 1-1: created by someone in the EPSRC office (“from all relevant data”)

- 2-1: copied from some commissioned report (probably private) from “consultants”

- 2-1: a selected panel of experts

- 3-1: created from figures dredged up on industry performance and reviewers (see above)

- 3-1: copied from some other funding body (e.g. NSF)

- 3-1: the field (i.e. some other origin – a dream, throwing darts)

EPSRC: the origins of this monstrosity will be revealed anyway, so it would be best to make a clean break now. You are not the most popular research council – the RSC, the Royal Society and several others have written asking you to think again about arbitrary and harmful funding decisions. The longer this goes on, the less credibility you will have.

We need to raise the quality of this debate. I’m not saying EPSRC is right, but it doesn’t help to distort what they are actually saying. Let’s make a bit more effort to understand what EPSRC have done and why they have done it, and start arguing on the basis of accurate information. EPSRC have actually been very open about why they are doing this, what the process has been, and what inputs they used to make strategic decisions.

Bourne’s team have actually published quite a detailed explanation of how they made *informed judgements* about the physical sciences portfolio, which is represented by the QI plot. (Just because a diagram isn’t quantifiable doesn’t mean it isn’t representative of something.)

Please read this and blog again: http://tiny.cc/f66xw

You may be interested in Prof Timothy Gowers’s deconstruction of the EPSRC announcement.

http://gowers.wordpress.com/2011/07/26/a-message-from-our-sponsors/

I’m guessing that they took all of the EPSRC funded papers in chemistry, categorised them, measured “importance” as the average number of citations, and “quality” as the average impact factor of the journal.

This is indeed one model, and I identified it as a possibility. Its only virtue is that it is mechanical. But the other methods have no virtues!
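For what it is worth, the mechanical model guessed at in the comment above can be sketched in a few lines, which is rather the point: whatever its flaws, anyone could rerun it. This is a minimal sketch assuming each paper record carries a subject category, a citation count and its journal’s impact factor; the `Paper` fields, the `quality_importance` helper and the sample records are all hypothetical, and nothing here is EPSRC’s actual method.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean

@dataclass
class Paper:
    field: str          # hypothetical subject category
    citations: int      # citation count for this paper
    journal_if: float   # impact factor of the journal it appeared in

def quality_importance(papers):
    """Return {field: (quality, importance)} under the guessed model:
    quality = mean journal impact factor, importance = mean citations."""
    by_field = defaultdict(list)
    for p in papers:
        by_field[p.field].append(p)
    return {
        field: (mean(p.journal_if for p in ps), mean(p.citations for p in ps))
        for field, ps in by_field.items()
    }

# Made-up records, purely to show that the computation is reproducible.
papers = [
    Paper("catalysis", 40, 6.0),
    Paper("catalysis", 10, 3.0),
    Paper("synthesis", 25, 4.0),
]

for field, (quality, importance) in sorted(quality_importance(papers).items()):
    print(f"{field}: quality={quality:.2f}, importance={importance:.2f}")
```

The inputs and the averaging rule are explicit, so the resulting plot could be checked and argued over, unlike a diagram of unknown origin.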