I am presenting this “talk” from the Web and including parts of my blog. This means I have to decide what I think I am going to say before I do or don’t say it. You know by now what I think of PDF and PowerPoint. This talk is in HTML and can be trivially XMLised robotically. It should be preservable indefinitely.

==== what I intend to cover ====

Data as well as text is now ESSENTIAL – we should stop using “full-text”, as it is dangerously destructive in science. “PDF” is an extremely effective way of destroying data. We need compound documents (Henry Rzepa and I have coined the term datument).
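To make the idea of a datument a little more concrete, here is a minimal sketch using only Python's standard `xml.etree.ElementTree`. It assumes a toy structure in which prose and data sit side by side in one XML document, so a robot can pull out the numbers directly instead of scraping them from rendered text. The element and attribute names (`datument`, `text`, `data`, `property`, `meltingPoint`), the compound label and the value are all hypothetical, chosen for illustration; they are not a published schema.

```python
import xml.etree.ElementTree as ET

# Hypothetical element/attribute names: an illustration of the datument idea,
# not a published schema.


def build_datument(prose: str, compound: str, mp_celsius: float) -> ET.Element:
    """Build a tiny compound document holding both prose and machine-readable data."""
    root = ET.Element("datument")
    text = ET.SubElement(root, "text")
    text.text = prose
    data = ET.SubElement(root, "data")
    prop = ET.SubElement(
        data, "property", name="meltingPoint", units="C", compound=compound
    )
    prop.text = str(mp_celsius)
    return root


def extract_melting_points(xml_string: str) -> dict[str, float]:
    """What a robot can do: read the numbers directly, without parsing any prose."""
    root = ET.fromstring(xml_string)
    results = {}
    for prop in root.iter("property"):
        if prop.get("name") == "meltingPoint":
            results[prop.get("compound")] = float(prop.text)
    return results


if __name__ == "__main__":
    # Hypothetical compound label and value, for illustration only.
    doc = build_datument("The product was recrystallised from ethanol.", "compound-1", 122.4)
    xml_string = ET.tostring(doc, encoding="unicode")
    print(xml_string)
    print(extract_melting_points(xml_string))  # {'compound-1': 122.4}
```

The point of the design is that the data survives as data: the same file can be rendered for a human reader and read by a program, which a PDF of the same text cannot offer.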

We need automated, instant access to and re-use of millions of published digital objects. The Harnad model of self-archiving on individual web pages, with copyright retained by publishers, is useless for modern robotic science.

Much scientific progress is made from the experiments of others by making connections, simulations, re-interpretation. We need semantic authoring. Librarians must support the complete publication process.

Problems:

- apathy and lack of vision among scientists (especially chemists); we need demonstrators before people will take us seriously

- restrictive or FUDdy IPR. Enormously destructive of time and effort

- emphasis on visual rendering rather than semantic content. Insidiously dangerous

- broken economic model (anticommons)

Successes:

- Blue Obelisk – communal Open Source (home, license) and Open Data group; Jmol. JFDI

- PubChem (Wikipedia, home page) and PubMed (UKPMC should be exciting)

- SPECTRa (JISC): collaborative project to reposit chemistry at source.

- Chemical blogosphere

Other initiatives:

- SPARC – Open Data mailing list

What must be done:

- DEVELOP TOOLS FOR AUTHORING, VERSIONING AND DISSEMINATING DATUMENTS. THESE MUST BE IN XML.

- INSIST THAT ALL AUTHORS’ WORKS ARE THEIR COPYRIGHT AND RE-USABLE UNDER A COMMONS-LIKE LICENSE

- INTRODUCE NEW APPROACHES TO PEER-REVIEW OF COMPLETE WORKS (WITH/WITHOUT “TEXT”). INCLUDE YOUNG PEOPLE AND SOCIAL COMPUTING

- DEVELOP AND USE LOOSELY-CONTROLLED DOMAIN-SPECIFIC VOCABULARIES (cf. microformats).

- PAY PUBLISHERS FOR WHAT ADDED VALUE THEY PROVIDE, NOT WHAT VALUE THEY CONTROL. CREATE A MARKET WHERE PUBLISHERS HAVE TO COMPETE WITH OTHER WAYS OF SOLVING THE PROBLEM (Google, folksonomies, etc.)
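As an illustration of a loosely-controlled, domain-specific vocabulary in the spirit of microformats, here is a minimal Python sketch using the standard library's `html.parser.HTMLParser`. It assumes a hypothetical convention in which ordinary HTML `class` attributes prefixed with `chem-` mark chemical terms; the prefix and term types are invented for this example, not an agreed standard. It also assumes reasonably well-formed markup (for instance, no unclosed void elements such as a bare `<br>`).

```python
from html.parser import HTMLParser
from typing import Optional

# Hypothetical vocabulary convention: class names starting with "chem-"
# mark domain terms. Invented for illustration, not an agreed standard.
VOCAB_PREFIX = "chem-"


class VocabularyExtractor(HTMLParser):
    """Collect (term-type, text) pairs from elements tagged with the vocabulary."""

    def __init__(self) -> None:
        super().__init__()
        self.terms: list[tuple[str, str]] = []
        # One entry per open element: the term type it carries, or None.
        self._stack: list[Optional[str]] = []

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        term_type = next(
            (c[len(VOCAB_PREFIX):] for c in classes if c.startswith(VOCAB_PREFIX)),
            None,
        )
        self._stack.append(term_type)

    def handle_endtag(self, tag):
        if self._stack:
            self._stack.pop()

    def handle_data(self, data):
        # Only text directly inside a vocabulary-tagged element is recorded.
        if self._stack and self._stack[-1] and data.strip():
            self.terms.append((self._stack[-1], data.strip()))


if __name__ == "__main__":
    html = (
        '<p>We dissolved <span class="chem-compound">benzene</span> in '
        '<span class="chem-solvent">ethanol</span>.</p>'
    )
    parser = VocabularyExtractor()
    parser.feed(html)
    parser.close()
    print(parser.terms)  # [('compound', 'benzene'), ('solvent', 'ethanol')]
```

The vocabulary is "loosely controlled" in the sense that authors only need to agree on a few class names; the page still renders normally for humans, while a robot can harvest the tagged terms.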

==== Previous posts and related blogs ====

Open Data – the time has come

Open Source, Open Data and the Science Commons

Is “peer-review” holding back innovation?

Beginnings

Blogging and the chemical semantic web the blogs

My data or our data?

Science Commons

Science Anticommons

Hamburger House of Horrors

Horrible GIFS

Hamburgers and Cows – the cognitive style of PDF

Thanks (and XML value chain)

The cost of decaying Scientific data

OSCAR – the chemical data reviewer

Linus’ Law and community peer-review

==== Live demos ====

Taverna

OSCAR1 (applet version)

OSCAR3 (local demo)

Crystallography (not yet released)

MACiE

GoogleInChI

DSpace (individual molecule)

chemstyle (needs MSIE)

==== what I actually said ====

Many thanks to William for recording all the talks; I am delighted to have this record made available. (I have not yet discussed copyright, but I hope it can go in our repository 🙂)

Kulhánek and Otto Exner, Org. Biomol. Chem., 2006, 4, 2003


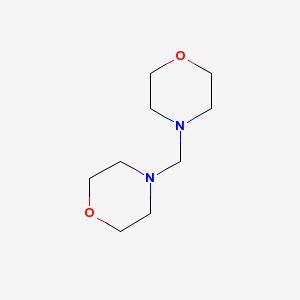

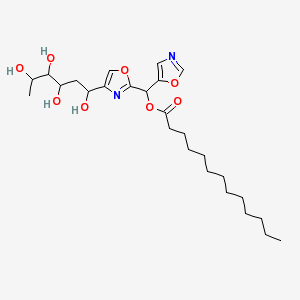

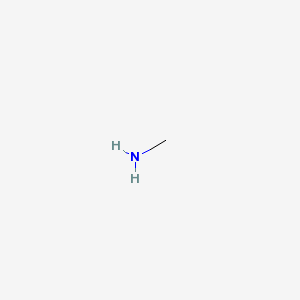

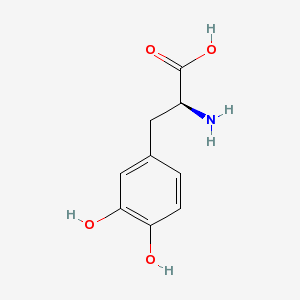

October 14th, 2006 at 7:55 pm: I have not been able to track down all of the blogs involved, but my final guess would be that these molecules are taken from chemical blogs. The first one is from tenderbutton; the last one was already recognized by J-C (thanks for the tip!). (Peter, please don’t say I’m wrong…)